By Ammar Khan

Introduction:

Digitalization has given the 21st century enough trust in technology that we can leave many of our services on autopilot. But what about the records we collected before digitization? Should we abandon them? Not only do they contain valuable information that deserves to be archived digitally, but they also hold functional data for gaining insights from past trends. Take an example from my own experience: a small police station in my locale has four storage rooms filled with handwritten archived records. Since most new records are now entered digitally, what do we do with the old ones? Leave them as they are? Dump them? From the government's standpoint, neither is feasible. Transforming the old records into digital form seems more reasonable. It will prolong the usability of the records, save a lot of physical space, and decrease the time required to find a particular record. For the sake of convenience, we are going to use a shallow network that can be trained on a laptop.

Problem Statement:

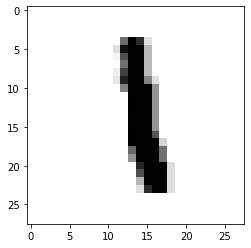

The problem that we are going to solve with Artificial Neural Network is recognizing numeric digits that are hand-written. The data set we chose to solve this is the MNIST Handwritten-Digits data set. The data set contains 60,000 training examples from 250 different writers. It contains 10,000 test examples for the model to evaluate it. An example from the data set is given below.

Methodology:

The framework used to train the ANN is Keras. Every pixel of every image was normalized to the range 0 to 1. The 2-D image matrices were then flattened into 1-D vectors of 784 values (28 × 28). The 10 digit classes, i.e. 0 to 9, were encoded using one-hot encoding. For the layers, we opted for a small, easily trainable neural network that does not require a cluster to train in a reasonable time. These layers are:

· Dense layer with 784 neurons, activation is relu (input layer).

· Dense layer with 256 neurons, activation is relu.

· Dense layer with 64 neurons, activation is sigmoid.

· Dense layer with 10 neurons, activation is softmax (output layer).
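The preprocessing steps and the layer stack described above can be sketched roughly as follows. This is a minimal sketch, not the exact code used for the article: the names `preprocess` and `build_model` are hypothetical, the `from tensorflow import keras` import path is an assumption about which Keras distribution is installed, and the raw images are assumed to be `uint8` arrays of shape `(n, 28, 28)`.

```python
import numpy as np

NUM_CLASSES = 10        # digits 0-9
IMAGE_SIZE = 28 * 28    # each MNIST image is 28x28 pixels


def preprocess(images, labels):
    """Scale pixels to [0, 1], flatten each image, and one-hot encode the labels."""
    x = images.astype("float32") / 255.0                # 0..255 -> 0..1
    x = x.reshape(len(images), IMAGE_SIZE)              # (n, 28, 28) -> (n, 784)
    y = np.eye(NUM_CLASSES, dtype="float32")[labels]    # (n,) -> (n, 10)
    return x, y


def build_model():
    """Build the four Dense layers listed above (hypothetical helper)."""
    # Imported here so that preprocess() works without TensorFlow installed.
    from tensorflow import keras

    return keras.Sequential([
        keras.Input(shape=(IMAGE_SIZE,)),
        keras.layers.Dense(784, activation="relu"),
        keras.layers.Dense(256, activation="relu"),
        keras.layers.Dense(64, activation="sigmoid"),
        keras.layers.Dense(NUM_CLASSES, activation="softmax"),
    ])
```

One way to obtain the raw arrays is `keras.datasets.mnist.load_data()`, which returns the training and test splits as `(images, labels)` pairs; whether the article loaded the data this way is an assumption.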

Training Hyperparameters:

optimizer: RMSprop

loss function: binary_crossentropy

learning rate: 2e-5

epochs: 30

batch size: 20

validation set: 20%
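To show how these settings fit together, here is a hedged sketch of a compile-and-fit step. The names `HYPERPARAMS`, `steps_per_epoch`, and `compile_and_train` are hypothetical, and tracking the `accuracy` metric is an assumption, since the article only reports the final accuracy. The loss is the one listed above; `categorical_crossentropy` is the more common pairing with a 10-way softmax and one-hot labels.

```python
HYPERPARAMS = {
    "learning_rate": 2e-5,
    "epochs": 30,
    "batch_size": 20,
    "validation_split": 0.2,
}


def steps_per_epoch(num_examples, params=HYPERPARAMS):
    """Number of weight updates per epoch once the validation share is held out."""
    num_val = round(num_examples * params["validation_split"])
    num_train = num_examples - num_val
    return -(-num_train // params["batch_size"])  # ceiling division


def compile_and_train(model, x_train, y_train, params=HYPERPARAMS):
    """Compile a Keras model with the listed settings and fit it."""
    # Imported here so the arithmetic helper above runs without TensorFlow.
    from tensorflow import keras

    model.compile(
        optimizer=keras.optimizers.RMSprop(learning_rate=params["learning_rate"]),
        loss="binary_crossentropy",   # as listed in the article
        metrics=["accuracy"],         # assumption: metric not stated in the article
    )
    return model.fit(
        x_train,
        y_train,
        epochs=params["epochs"],
        batch_size=params["batch_size"],
        validation_split=params["validation_split"],
    )
```

With 60,000 training examples, a 20% validation split leaves 48,000 examples for training, so a batch size of 20 gives 2,400 updates per epoch and 72,000 updates over 30 epochs.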

Results:

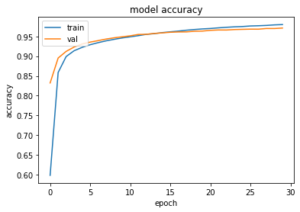

The model was neither over fitting, nor under fitting, as shown in the figure below:

The accuracy of the model for test set was 97.11%, which is excellent for a shallow neural network.

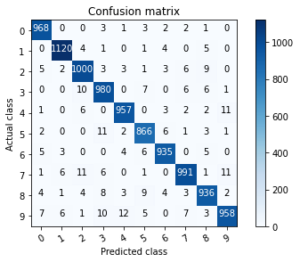

The confusion-matrix for all test set is shown below:
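For readers who want to compute such a matrix themselves, the following is a minimal NumPy sketch. The function names are hypothetical, and the rows-are-true, columns-are-predicted layout is an assumption that may not match the orientation of the figure.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, num_classes=10):
    """Count predictions: rows are true digits, columns are predicted digits."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    # add.at accumulates correctly even when the same (row, col) pair repeats.
    np.add.at(matrix, (true_labels, predicted_labels), 1)
    return matrix


def accuracy(matrix):
    """Share of correct predictions: the diagonal divided by the total."""
    return np.trace(matrix) / matrix.sum()
```

With a trained Keras model, the predicted labels could be obtained as `model.predict(x_test).argmax(axis=1)` and the true labels as the integer digits before one-hot encoding.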

Conclusion:

This example shows the capability of Deep Learning in the field of image recognition for tasks that are tedious in terms of labor. Since we would rather not process archives manually, we can train a model and let it do the work. To streamline this into a workflow, we can build a pipeline with a Deep Learning model attached to each decision path. Say the first model's job is to draw bounding boxes around the things we want to process. These boxes are then sent to other Deep Learning models that process the detected objects, with each final model handling a single category only. Why split it up like that, one may ask? Training one larger network to do all of these jobs can be an extremely painful process, and designing it becomes more confusing because one layer might drop inputs required by another. With separate models, each one can be attached and optimized for its own duty. That is cleaner and more trainable, workable, and manageable.
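The staged workflow described above could be wired together along these lines. This is only an illustrative sketch: `run_pipeline` and the three stage arguments are hypothetical names, the stages are plain callables standing in for trained models, and boxes are assumed to be `(top, left, bottom, right)` pixel coordinates.

```python
import numpy as np


def run_pipeline(page, detect_boxes, classify_category, specialists):
    """Crop each detected box, route it by category, and apply that category's model."""
    results = []
    for top, left, bottom, right in detect_boxes(page):
        crop = page[top:bottom, left:right]
        category = classify_category(crop)      # decides which path to take
        output = specialists[category](crop)    # one model per category
        results.append((category, output))
    return results


# Stand-in stages for illustration only; real ones would be trained models.
def fake_detector(page):
    return [(0, 0, 2, 2), (2, 2, 4, 4)]


def fake_router(crop):
    return "digit" if crop.sum() < 20 else "text"


fake_specialists = {
    "digit": lambda crop: int(crop.max()),
    "text": lambda crop: crop.shape,
}
```

Because each stage is passed in as an argument, any one model can be retrained or replaced without touching the others.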